How can I use a Large Language Model (LLM) in my workflow?

Spojit lets businesses integrate Large Language Models (LLMs) into automated workflows. Typical uses include automating customer support and sales processes, generating content, and analyzing market trends. Whether you want to improve internal workflows or enhance customer experiences, Spojit provides the tools to put LLMs to work across your organization.

Quickstart Guide

This quickstart guide walks through a simple example of running an LLM in your workflow. We will connect to an external API and translate some text from its response into a different language. It assumes that you already know how to create a workflow, add and connect a service, map data between services, and run a workflow. If you are just starting or need a refresher, head over to our getting started guide.

Add services

We'll start by adding a JSON REST service to the canvas with the following configuration:

| Method | API URL |
|---|---|
| get | https://json-placeholder.mock.beeceptor.com/posts/1 |

When the service is run it will return the following JSON object:

{
  "userId": 1,
  "id": 1,
  "title": "Introduction to Artificial Intelligence",
  "body": "Learn the basics of Artificial Intelligence and its applications in various industries.",
  "link": "https://example.com/article1",
  "comment_count": 8
}

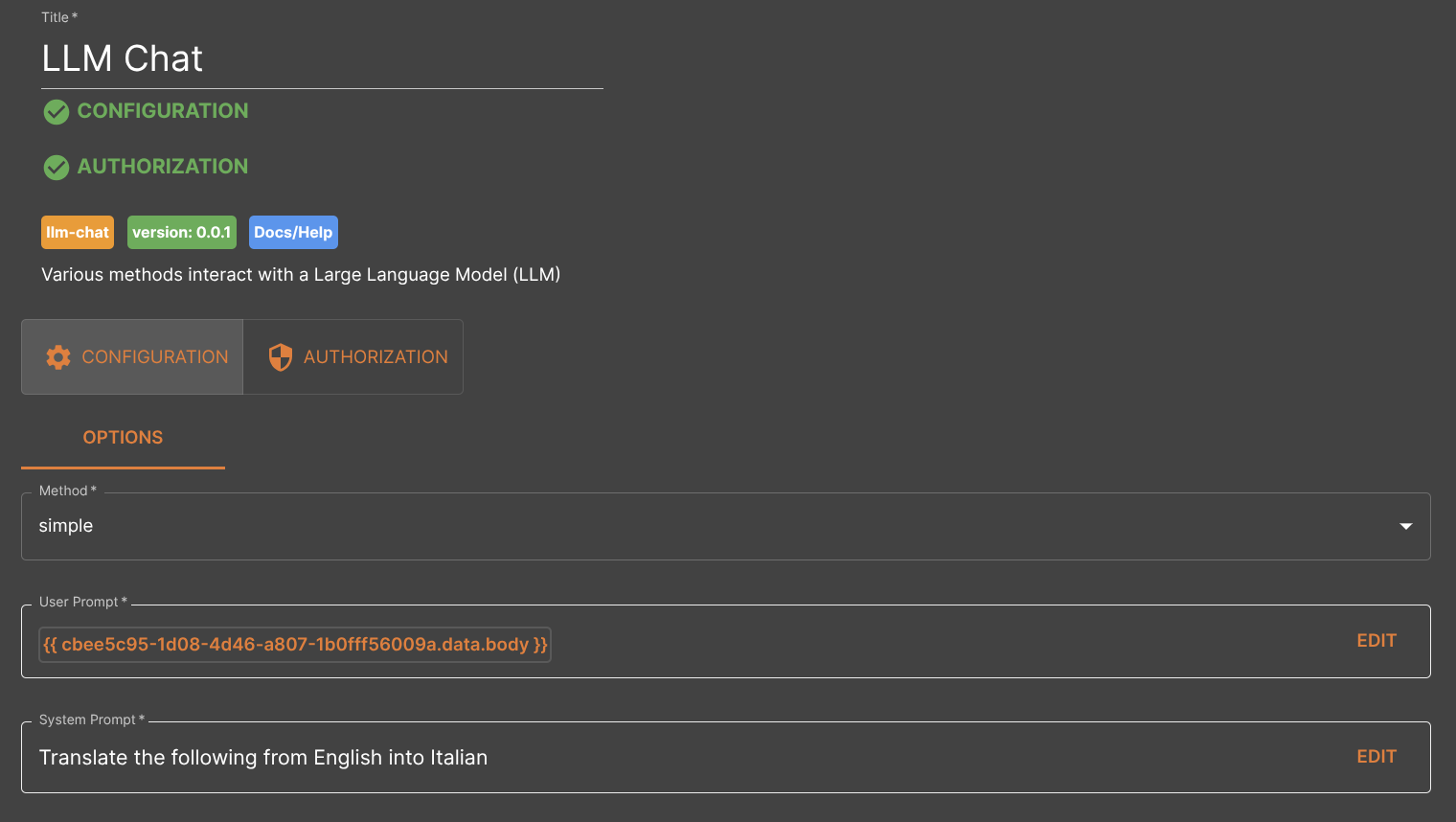
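To make the REST step concrete outside the canvas, here is a minimal Python sketch of what the service does: issue a GET request and pick out the `body` field that is later mapped into the LLM prompt. The helper names `fetch_post` and `extract_body` are hypothetical and are not part of Spojit. The sample payload is copied from the response above so the example also runs without network access.

```python
import json
import urllib.request

# URL taken from the REST service configuration above.
POST_URL = "https://json-placeholder.mock.beeceptor.com/posts/1"


def fetch_post(url: str = POST_URL, timeout: float = 10.0) -> dict:
    """GET the URL and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def extract_body(post: dict) -> str:
    """Return the 'body' field, the text that is mapped to the LLM user prompt."""
    return post["body"]


# Offline sample copied from the response shown above.
SAMPLE_RESPONSE = """
{
  "userId": 1,
  "id": 1,
  "title": "Introduction to Artificial Intelligence",
  "body": "Learn the basics of Artificial Intelligence and its applications in various industries.",
  "link": "https://example.com/article1",
  "comment_count": 8
}
"""

sample_post = json.loads(SAMPLE_RESPONSE)
print(extract_body(sample_post))
```

Calling `extract_body(fetch_post())` would do the same against the live mock endpoint, assuming it is reachable and still returns this shape.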

Next, add the LLM Chat service to the canvas and link it to the REST service. Add the following configuration to the LLM Chat service:

| Method | System Prompt | User Prompt |
|---|---|---|
| simple | Translate the following from English into Italian | Map the body field from the REST service |
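Conceptually, this configuration pairs a fixed system prompt with a user prompt taken from the REST response. The sketch below shows that mapping in Python using the role/content message list that many chat APIs accept. This format is an assumption for illustration and not a description of how Spojit builds requests internally, and `build_messages` is a hypothetical helper.

```python
# System prompt copied from the LLM Chat configuration above.
SYSTEM_PROMPT = "Translate the following from English into Italian"


def build_messages(system_prompt: str, post: dict) -> list:
    """Combine the fixed system prompt with the mapped 'body' field.

    The role/content dictionaries follow a common chat-API convention;
    the exact request shape used by the platform is an assumption here.
    """
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": post["body"]},
    ]


# Only the field that gets mapped is needed from the REST response.
rest_response = {
    "body": "Learn the basics of Artificial Intelligence and its applications in various industries."
}

messages = build_messages(SYSTEM_PROMPT, rest_response)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Keeping the instruction in the system prompt and the variable text in the user prompt means the same workflow can translate any `body` value without editing the prompt itself.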

Choose a provider

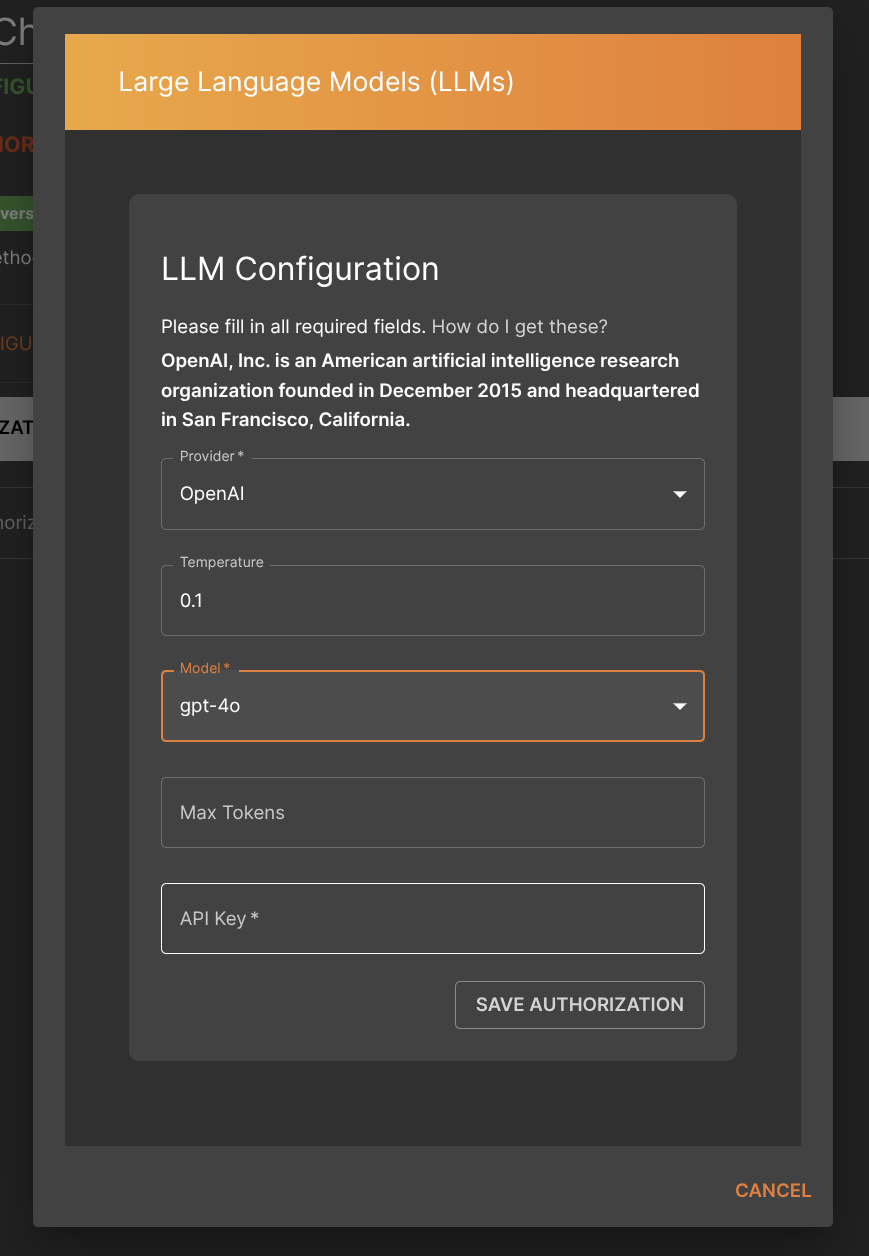

Next, go to the LLM Chat service and open the Authorization section. Click the "Add New Authorization" button and a popup will appear.

Select the Provider and Model, then enter your API Key.

The following providers can be used in our platform:

| Provider | Description | Example Models |
|---|---|---|
| Anthropic | Anthropic is an AI safety and research company based in San Francisco. | claude-3-7-sonnet-20250219 |
| DeepSeek | DeepSeek AI is an AI research company focused on cost-efficient, high-performance language models. | deepseek-reasoner |
| Groq | Groq, Inc. is an American artificial intelligence company that builds an AI accelerator application-specific integrated circuit. | llama-3.3-70b-versatile |
| MistralAI | Mistral AI SAS is a French artificial intelligence startup, headquartered in Paris. It specializes in open-weight large language models. | mistral-small |
| OpenAI | OpenAI, Inc. is an American artificial intelligence research organization founded in December 2015 and headquartered in San Francisco, California. | gpt-4o, gpt-4o-mini |
| VertexAI | VertexAI (Express Mode) is a machine learning (ML) platform that lets you train and deploy ML models and AI applications, and customize large language models (LLMs) for use in your AI-powered applications. | gemini-2.0-flash-exp |

Choose the best provider and model for your use case.

Run your workflow

Save your workflow and click Run Now. You should get an output similar to the following:

{
  "data": "Impara i fondamenti dell'Intelligenza Artificiale e le sue applicazioni in diversi settori industriali.",
  "metadata": {
    "usage": {
      "input_tokens": 35,
      "output_tokens": 28,
      "total_tokens": 63
    }
  }
}

The model's response is in the data field, and the metadata field contains the token usage for the request.
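If you process this output in a later step or in your own code, reading it might look like the following Python sketch. The `summarize_output` helper is hypothetical. The field names come from the sample output above, and the check that input and output tokens add up to the total holds for this sample (35 + 28 = 63) but is an assumption about other responses.

```python
import json

# Sample output copied from the workflow run above.
RAW_OUTPUT = """
{
  "data": "Impara i fondamenti dell'Intelligenza Artificiale e le sue applicazioni in diversi settori industriali.",
  "metadata": {
    "usage": {
      "input_tokens": 35,
      "output_tokens": 28,
      "total_tokens": 63
    }
  }
}
"""


def summarize_output(raw: str) -> dict:
    """Extract the translated text and token counts from the workflow output."""
    result = json.loads(raw)
    usage = result["metadata"]["usage"]
    return {
        "text": result["data"],
        "input_tokens": usage["input_tokens"],
        "output_tokens": usage["output_tokens"],
        "total_tokens": usage["total_tokens"],
    }


summary = summarize_output(RAW_OUTPUT)
print(summary["text"])
print("tokens used:", summary["total_tokens"])
```

Tracking `total_tokens` per run is a simple way to estimate cost, since providers typically bill by token count.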

All of our resources are currently in our developer documentation; find out more here. Contact us if you would like any more information.